Software and apps used: Python; Eagle (Autodesk); Autodesk Fusion 360 (Autodesk); Azure Machine Vision (Microsoft)

Spiderman Spidey Sense and Webslinger

INTRODUCTION

Since I was six, I've thought it would be cool to make my own web caster. Not knowing much back then, I figured an explosive charge could shoot out fishing line with a suction cup on the end, and that would do the trick. 3D printers were only just becoming affordable, and we didn't have one at the time, so the project idea was shelved.

Since then, my Dad and I have become Makers. That gave me a thought: what if the Spider-Verse had another character? Say he's 14 years old, an only child, and grew up in a basement full of old motors, mechanical parts, and electronics tools. He's accumulated two 3D printers and a welder. At 9, he started a Maker channel (Raising Awesome). His dad impulse-bought a sewing machine on Prime Day, and THEN, at 14, he was bitten by the radioactive Maker bug... well, arachnid. He was a Maker first, then got his Spidey powers. What would that character be like?

So, we dreamed up a Webslinger Gauntlet and a Spidey-Sense Visual AI Circuit.

PROJECT DESIGN

Webslinger

The webslinger gauntlet houses a 16-gram CO2 cartridge whose burst of pressure shoots out a hook tethered with Kevlar. No MCU is needed for this, just a valve like the ones used to inflate bike tires. A motor in the gauntlet will retract the Kevlar.

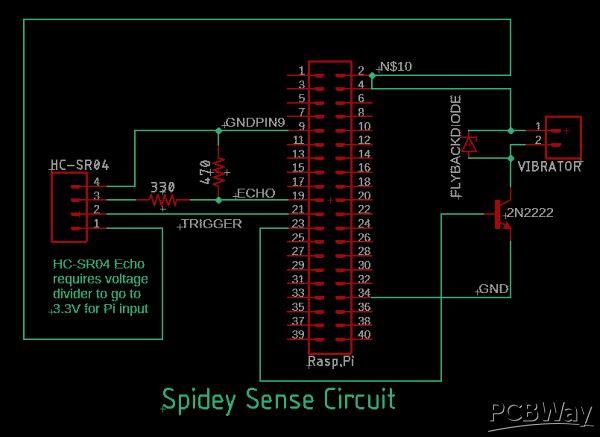

Spider-Sense

The camera and proximity sensor were sewn into the back of the shirt. The Raspberry Pi A+ served as the brain of the suit, controlling all of its sensors and cameras. We also used a Raspberry Pi Sense HAT, whose built-in RGB LED display changes the logo, for example when the "Spidey Sense" is triggered. Thanks to the timing of this contest, I was able to score one last Halloween costume.
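The Sense HAT's `set_pixels()` call expects a flat list of 64 RGB colors, read left to right and top to bottom. That is why the logo frames in the script below are written as 8 rows of 8. As a minimal sketch, a frame could instead be drawn as ASCII art and converted. The `frame_from_rows` helper and the one-letter color map are hypothetical illustrations, not part of the project code.

```python
# Hypothetical helper: turn 8 strings of 8 characters into the flat
# 64-entry list of (R, G, B) tuples that SenseHat.set_pixels() expects.
RED = (204, 0, 0)
BLUE = (0, 0, 255)

# Assumed convention: each character in the ASCII art names a color.
PALETTE = {"r": RED, "b": BLUE}


def frame_from_rows(rows, palette=PALETTE):
    if len(rows) != 8 or any(len(row) != 8 for row in rows):
        raise ValueError("a Sense HAT frame needs 8 rows of 8 pixels")
    # Flatten row by row: top row first, left to right within each row.
    return [palette[ch] for row in rows for ch in row]


# Same picture as frame a1 in the Spidey Sense script.
A1_ROWS = [
    "brbbbbrb",
    "brbbbbrb",
    "bbrrrrbb",
    "bbbrrbbb",
    "rrrrrrrr",
    "bbbrrbbb",
    "bbrbbrbb",
    "brbbbbrb",
]

a1 = frame_from_rows(A1_ROWS)
```

On the Pi, `sense.set_pixels(a1)` would then show exactly the same frame as the hand-written list. Editing a row string is easier than counting commas.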

You can find the model on our GitHub site: https://github.com/RaisingAwesome/Spider-man-Into-the-Maker-Verse/tree/master.

This is the code that triggers the RGB animation and the vibration:

```python
from sense_hat import SenseHat
import time
import RPi.GPIO as GPIO

# GPIO mode (BOARD / BCM)
GPIO.setmode(GPIO.BCM)

# Set GPIO pins
GPIO_ECHO = 9
GPIO_TRIGGER = 10
GPIO_VIBRATE = 11

# Set GPIO direction (IN / OUT)
GPIO.setup(GPIO_TRIGGER, GPIO.OUT)
GPIO.setup(GPIO_ECHO, GPIO.IN)
GPIO.setup(GPIO_VIBRATE, GPIO.OUT)

sense = SenseHat()

# RGB colors for the 8x8 LED matrix
g = (0, 255, 0)
b = (0, 0, 255)
y = (255, 255, 0)
w = (255, 255, 255)
r = (204, 0, 0)

# Three frames of the spider logo animation (64 pixels each, row by row)
a1 = [
    b, r, b, b, b, b, r, b,
    b, r, b, b, b, b, r, b,
    b, b, r, r, r, r, b, b,
    b, b, b, r, r, b, b, b,
    r, r, r, r, r, r, r, r,
    b, b, b, r, r, b, b, b,
    b, b, r, b, b, r, b, b,
    b, r, b, b, b, b, r, b
]

a2 = [
    b, b, r, b, b, r, b, b,
    b, r, b, b, b, b, r, b,
    b, b, r, r, r, r, b, b,
    r, b, b, r, r, b, b, r,
    b, r, r, r, r, r, r, b,
    r, b, b, r, r, b, b, r,
    b, b, r, b, b, r, b, b,
    b, b, r, b, b, r, b, b
]

a3 = [
    r, b, b, b, b, b, b, r,
    b, r, b, b, b, b, r, b,
    b, b, r, r, r, r, b, b,
    r, b, b, r, r, b, b, r,
    b, r, r, r, r, r, r, b,
    r, b, b, r, r, b, b, r,
    b, b, r, b, b, r, b, b,
    b, r, b, b, b, b, r, b
]


def animate():
    # distance() is given in feet. Each frame is held for a time given by the
    # linear equation y = mx + b with b = 0 and m = 0.05, so the animation
    # speeds up as something gets closer.
    for frame in (a1, a2, a1, a3):
        sense.set_pixels(frame)
        time.sleep(0.05 * distance())


def distance():
    # Returns the distance to the nearest object in whole feet.
    # Set Trigger HIGH, then LOW after 0.00001 seconds (10 us) to send out a ping.
    GPIO.output(GPIO_TRIGGER, True)
    time.sleep(0.00001)
    GPIO.output(GPIO_TRIGGER, False)

    # So we don't wait forever, break out after 25 ms if anything goes wrong.
    # The sensor should raise the echo pin within milliseconds of the ping.
    timeout = time.time()
    start_time = timeout
    while GPIO.input(GPIO_ECHO) == 0:
        start_time = time.time()
        if start_time > timeout + 0.025:
            break

    # Ultrasound travels at the speed of sound, so anything within the sensor's
    # detection range pongs back well inside 25 ms. Record when the echo ends.
    stop_time = start_time
    while GPIO.input(GPIO_ECHO) == 1:
        stop_time = time.time()
        if stop_time > start_time + 0.025:
            break

    # Time between the ping and its echo, multiplied by the speed of sound
    # (34300 cm/s) and halved because the sound travels there and back,
    # then converted to feet by dividing by 30.48 cm per foot.
    time_elapsed = stop_time - start_time
    dist_ft = (time_elapsed * 17150) / 30.48

    # A near-zero reading usually means the ping timed out; treat it as 5 ft.
    if dist_ft < 0.1:
        dist_ft = 5
    dist_ft = round(dist_ft)

    if dist_ft < 5:
        vibrate()
    return dist_ft


def vibrate():
    # If something is very close, buzz the Spidey Sense for 0.1 s
    GPIO.output(GPIO_VIBRATE, True)
    time.sleep(0.1)
    GPIO.output(GPIO_VIBRATE, False)


# Run this script stand-alone, or import it into another script
# to use all its functions.
if __name__ == '__main__':
    try:
        GPIO.output(GPIO_TRIGGER, False)
        GPIO.output(GPIO_VIBRATE, False)
        time.sleep(1)
        while True:
            animate()
            # Example from the imported Sense HAT library:
            # sense.show_message("Sean Loves Brenda and Connor!!", text_colour=y, back_colour=b, scroll_speed=.05)
    except KeyboardInterrupt:
        # Handle pressing CTRL + C to exit
        print("\n\nSpiderbrain execution stopped.\n")
    finally:
        GPIO.cleanup()
```
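The feet conversion in `distance()` is easier to trust once you plug in numbers. Sound covers about 34,300 cm/s, and the echo travels out and back, so the one-way distance in centimeters is `elapsed * 17150`. Dividing by 30.48 cm per foot gives feet. The sketch below is a hardware-free version of that arithmetic, and the function names are illustrative rather than taken from the project. It also shows what the 25 ms timeout implies: the longest echo it will wait for corresponds to roughly 14 feet.

```python
SPEED_OF_SOUND_CM_S = 34300
CM_PER_FOOT = 30.48


def echo_to_feet(elapsed_s):
    # Halve the round trip, then convert centimeters to feet.
    return elapsed_s * (SPEED_OF_SOUND_CM_S / 2) / CM_PER_FOOT


def feet_to_echo(dist_ft):
    # Inverse: how long the echo pin stays HIGH for an object dist_ft away.
    return dist_ft * CM_PER_FOOT / (SPEED_OF_SOUND_CM_S / 2)


def frame_delay(dist_ft):
    # animate() holds each frame for 0.05 s per foot (y = 0.05 * x).
    return 0.05 * dist_ft


# 5 ft (the vibrate threshold) is an echo of about 8.9 ms...
print(round(feet_to_echo(5) * 1000, 2))   # 8.89
# ...and the 25 ms timeout corresponds to about 14.1 ft.
print(round(echo_to_feet(0.025), 1))      # 14.1
```

At the 5 ft fallback reading, each frame lasts 0.25 s. At 1 ft, that drops to 0.05 s, which is what makes the logo animation race as something approaches.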

Visual AI

If you've seen Spider-Man: Homecoming, you know about Karen, the all-new Stark-branded AI that Peter uses in his mask to assist him on missions. Karen was designed to highlight threats, alert Peter to his surroundings, and control many of his suit's functions. While an AI chatbot that responds with a voice and a sense of emotion may not be the easiest thing to build for this competition, we did think ahead and include a way to create this artificial "Spidey-Sense." We decided this was a good time to take advantage of the surge in popularity of Microsoft Azure and the Machine Vision API provided by Microsoft.

We built a "see-in-the-dark" solution with the Raspberry Pi Model A and a NoIR camera.

The Microsoft Computer Vision cloud service analyzes the contents of an image taken by the Raspberry Pi camera (aka my Pi-der cam), which is mounted to a belt. To activate this super sixth sense, I have to stand very still: once the Sense HAT's accelerometer stabilizes, the picture is taken automatically. Over my cell phone's personal hotspot, the Azure API analyzes the image, and the eSpeak package on the Raspberry Pi tells me what it sees through an earpiece. This lets the suit tell me if a car is close behind me, or maybe an evil villain.
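The camera script that follows only waits three seconds before capturing, so the hold-still trigger described above is not part of it. One way it might be done, as a sketch under assumptions: sample the Sense HAT accelerometer, whose `get_accelerometer_raw()` returns a dict with `x`, `y`, `z` in g. The wearer is then treated as still once the last few readings vary by less than a small tolerance. The window size, tolerance, timeout, and function names here are hypothetical choices, not values from the project.

```python
import time
from collections import deque


def is_still(readings, tolerance=0.05):
    """True if every axis varied by less than `tolerance` g across the readings."""
    if not readings:
        return False
    for axis in ("x", "y", "z"):
        values = [reading[axis] for reading in readings]
        if max(values) - min(values) >= tolerance:
            return False
    return True


def wait_until_still(read_accel, window=10, tolerance=0.05, interval=0.05, timeout=30.0):
    """Poll read_accel() until the last `window` samples are all still.

    read_accel is any zero-argument callable returning {'x': .., 'y': .., 'z': ..};
    on the Pi that would be sense.get_accelerometer_raw.
    Returns True once still, or False if `timeout` seconds pass first.
    """
    recent = deque(maxlen=window)  # sliding window of the newest samples
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        recent.append(read_accel())
        if len(recent) == window and is_still(recent, tolerance):
            return True
        time.sleep(interval)
    return False
```

On the Pi, the trigger could then read `if wait_until_still(sense.get_accelerometer_raw): spidersense()`. Passing the reader in as a callable keeps the stillness logic testable without the hardware attached.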

Python Visual AI for Microsoft Azure Machine Vision:

```python
import os
import shlex
import time

import requests
from picamera import PiCamera

camera = PiCamera()

# Paste your Computer Vision subscription key and endpoint here.
subscription_key = "YOUR KEY HERE!!!"
endpoint = "https://westcentralus.api.cognitive.microsoft.com/"
analyze_url = endpoint + "vision/v2.0/analyze"

# Local path where the captured image is saved and then read back for analysis.
image_path = "/home/spiderman/SpiderBrain/image.jpg"


def spidersense():
    # Give the camera a few seconds to settle, then take the picture
    camera.start_preview()
    time.sleep(3)
    camera.capture(image_path)
    camera.stop_preview()

    # Read the image into a byte array
    with open(image_path, "rb") as image_file:
        image_data = image_file.read()

    headers = {'Ocp-Apim-Subscription-Key': subscription_key,
               'Content-Type': 'application/octet-stream'}
    params = {'visualFeatures': 'Categories,Description,Color'}
    response = requests.post(
        analyze_url, headers=headers, params=params, data=image_data)
    response.raise_for_status()

    # The 'analysis' object contains various fields that describe the image. The most
    # relevant caption for the image is obtained from the 'description' property.
    analysis = response.json()
    image_caption = analysis["description"]["captions"][0]["text"].capitalize()

    # Speak the caption through the earpiece with eSpeak, piped to aplay.
    # shlex.quote stops quotes or apostrophes in the caption from breaking the command.
    phrase = shlex.quote("Connor. I see " + image_caption)
    the_statement = "espeak -s165 -p85 -ven+f3 " + phrase + " --stdout | aplay 2>/dev/null"
    os.system(the_statement)
    # print(image_caption)


if __name__ == '__main__':
    spidersense()
```
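The script indexes `captions[0]` directly, which raises an `IndexError` if the service returns no caption. A slightly more defensive sketch assumes the same v2.0 `analyze` response shape the script already relies on: `description.captions`, each with a `text` and a `confidence` score between 0 and 1. It picks the most confident caption and falls back to a default phrase below a threshold. The `pick_caption` name, the 0.3 threshold, and the fallback wording are illustrative assumptions.

```python
def pick_caption(analysis, min_confidence=0.3, fallback="something I can't make out"):
    """Return the most confident caption text from an analyze response dict."""
    captions = analysis.get("description", {}).get("captions", [])
    if not captions:
        return fallback
    best = max(captions, key=lambda caption: caption.get("confidence", 0.0))
    if best.get("confidence", 0.0) < min_confidence:
        return fallback
    return best["text"].capitalize()


# Illustrative response fragment in the shape the script reads (values invented).
sample = {
    "description": {
        "captions": [
            {"text": "a car parked on a street", "confidence": 0.82},
            {"text": "a person standing", "confidence": 0.41},
        ]
    }
}
print(pick_caption(sample))  # A car parked on a street
```

Inside `spidersense()`, `image_caption = pick_caption(analysis)` would replace the direct index. The earpiece then says something sensible even when the service is unsure.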

BUILD VIDEO

To see this all come together, here is our build video:

Spiderman Spidey Sense and Webslinger



More by Sean Miller:

- World's First Published Open-source Arduino Nano RP2040 Connect Drone Flight Controller
- LaserCutter_PCB_2022-02-27
- IoT Smart Desk Distance and KPI Meter
